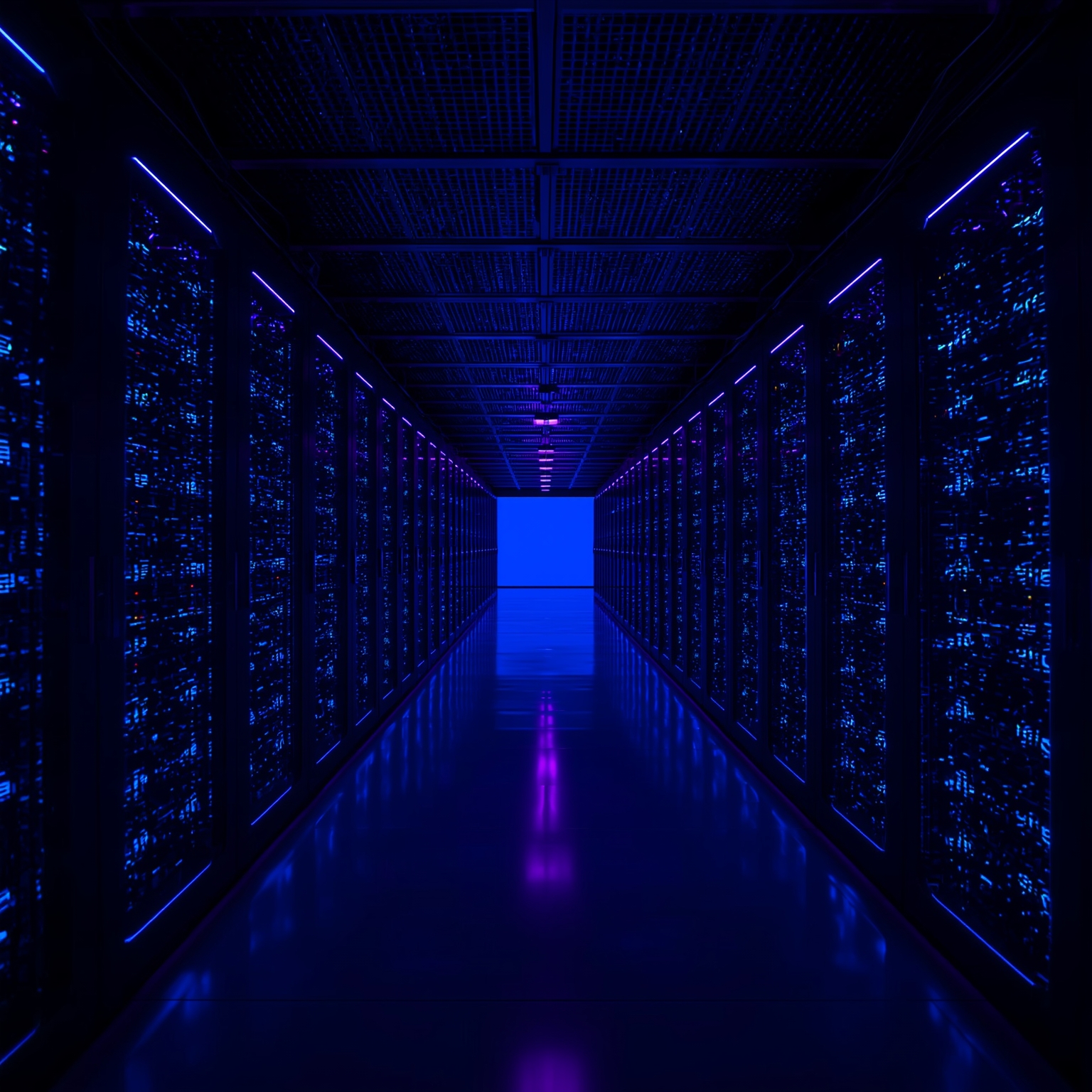

On Thursday, Microsoft CEO Satya Nadella shared a video on his official social media account showing the company’s first deployed large-scale AI system, the kind of computational cluster that Nvidia likes to call an AI “factory.” Nadella framed the launch as the first stage of a global rollout, promising that many more of these Nvidia-powered AI factories will be deployed across Microsoft Azure’s data centers worldwide. The systems are built to run workloads for OpenAI and similar partners, positioning Microsoft as a central supplier of compute in the fast-growing AI ecosystem.

Each system is a cluster of more than 4,600 Nvidia GB300 rack-mounted computing units built around the Blackwell Ultra GPU, one of Nvidia’s most sought-after chips, designed for the heavy computational load of AI training and inference. The GPUs are connected by Nvidia’s InfiniBand networking technology, whose high-bandwidth, low-latency links between nodes are essential for training models at this scale. Nvidia CEO Jensen Huang anticipated the strategic value of this networking platform when his company struck a $6.9 billion deal to acquire Mellanox in 2019. With that acquisition, Nvidia could offer not only AI chips but also the networking backbone needed for high-performance computing at cloud scale.

Microsoft says it intends to deploy “hundreds of thousands” of Blackwell Ultra GPUs across its data centers worldwide as the rollout continues. The scale of these installations is notable, but so is the timing: the announcement follows major data center deals between OpenAI, Microsoft’s closest partner and occasional rival, and the chipmakers Nvidia and AMD. By some estimates, OpenAI has racked up as much as $1 trillion in commitments in 2025 to build its own AI data centers, a sign of the resources the next generation of AI systems will require. Its CEO, Sam Altman, has indicated that this is only the beginning, with more facilities planned.

Microsoft’s announcement, by contrast, makes a clear strategic point: the company already operates more than 300 data centers across 34 countries. Microsoft says these facilities form a unified global cloud platform “uniquely positioned” to meet the compute and storage demands of frontier AI. The company also says they will be used to train, test, and deploy future AI models with hundreds of trillions of parameters. Microsoft is presenting that combination of existing scale and specialized hardware as its advantage in accelerating AI development.

More details may come later this month, when Microsoft’s Chief Technology Officer, Kevin Scott, is scheduled to speak at TechCrunch Disrupt in San Francisco, held October 27 to 29. He is expected to discuss how Microsoft plans to expand its AI infrastructure, manage global workloads efficiently, and keep building out Azure’s capacity to support the largest AI models.

Source: https://techcrunch.com/2025/10/09/while-openai-races-to-build-ai-data-centers-nadella-reminds-us-that-microsoft-already-has-them/