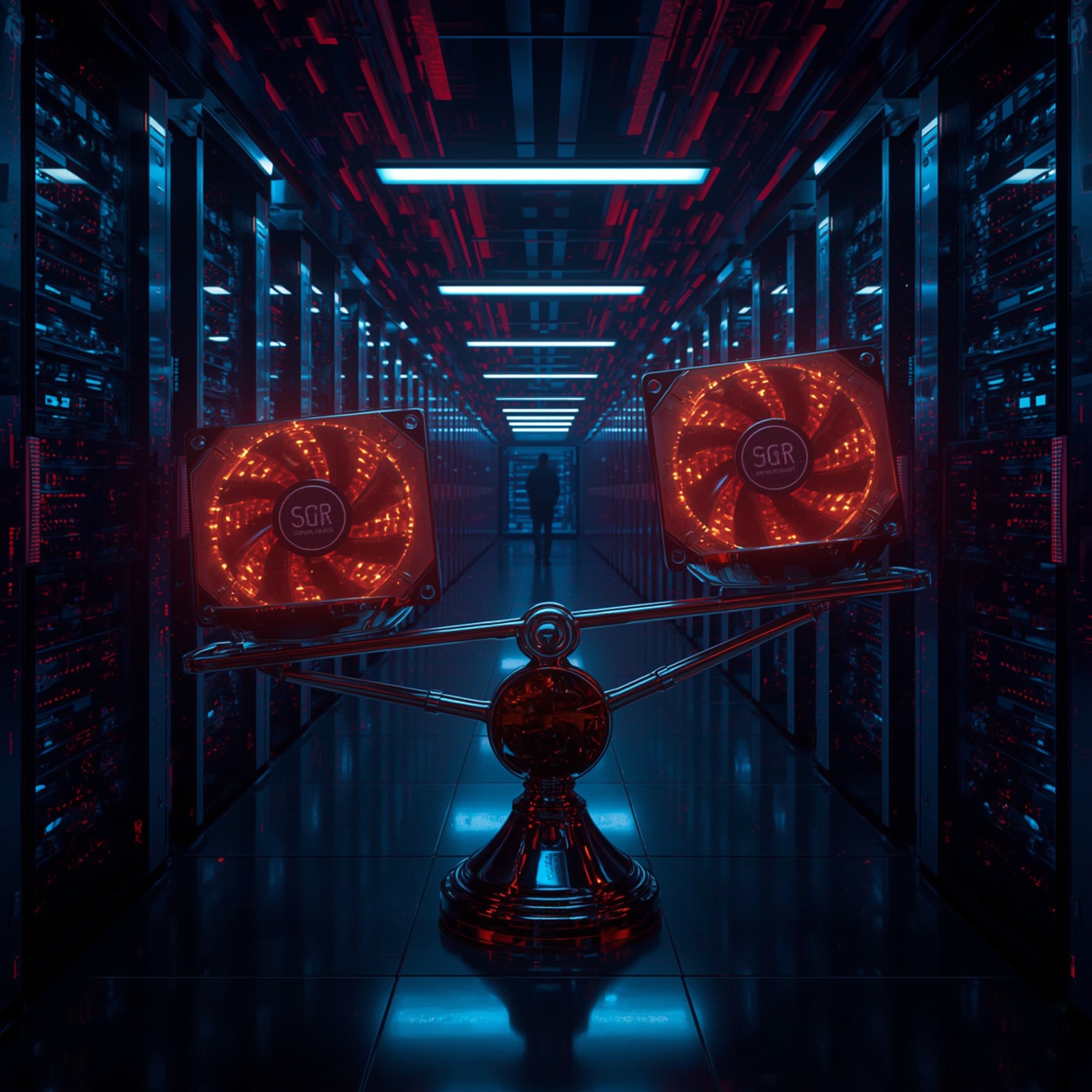

OpenAI’s president, Greg Brockman, offered a candid glimpse into one of the company’s most difficult internal challenges: deciding which teams receive access to its highly coveted graphics processing units, or GPUs. He described the allocation process as an exercise in ‘pain and suffering,’ underscoring both its technical complexity and the emotional strain of such high-stakes decisions. Brockman made the comments on the ‘Matthew Berman’ podcast, in an episode released on Thursday. He explained that distributing computing resources, which are essential to virtually every part of OpenAI’s operations, demands constant judgment calls, emotional resilience, and strategic finesse.

Brockman explained that overseeing GPU allocation is far more than a logistical task; it is emotionally charged and mentally exhausting. The difficulty, he noted, stems from the sheer number of outstanding research proposals and ideas emerging within the company. Each new project pitch is a genuine opportunity for progress, which makes it exceptionally hard to turn down requests for more computing power. “It’s so difficult,” he remarked, “because you see all these brilliant initiatives, and then another equally impressive idea arrives, and you can’t help but think: yes, that one truly deserves resources as well.” The remark captures the tension between enthusiasm for new ideas and the reality of finite computational capacity.

To handle these competing demands, Brockman said, OpenAI divides its computing power between two major domains: the research division and the applied product teams. Within research, decisions about how GPUs are distributed among ongoing projects rest with the company’s chief scientist and its head of research, who assess which initiatives most merit the hardware. At a higher level, OpenAI’s senior leadership, including CEO Sam Altman and Fidji Simo, the company’s CEO of applications, sets the overall balance between research and applied computing so that both research and product development continue to progress in tandem.

Day to day, a small, tightly coordinated internal operations team handles the ongoing reallocation of GPUs as projects evolve. One member of that team, Kevin Park, plays a key role in redistributing hardware once teams complete or wind down their initiatives. Brockman likened the process to an internal marketplace for GPUs: when a group comes to Park saying, for example, “We need this many additional GPUs for a new project that just began,” Park has to identify other projects that are tapering off and can release their hardware. This constant juggling reflects both the efficiency and the tension of managing a resource that is scarce yet vital.

This internal ‘GPU shuffle,’ as Brockman called it, mirrors a much larger issue that OpenAI has been vocal about for months: the global shortage of the very computing infrastructure that fuels the next generation of artificial intelligence. The availability of GPUs dictates productivity across teams, and as Brockman pointedly emphasized, the stakes could not be higher. “People really care,” he said, describing how emotions often run high when teams learn whether they will receive the computing power they requested. According to him, the passion and anxiety surrounding these allocation decisions are impossible to overstate because, for OpenAI’s researchers and engineers, securing compute resources can be the difference between stalling and achieving a major breakthrough.

Neither Brockman nor OpenAI provided further comment when contacted by Business Insider. Yet the dynamic he described fits into a larger narrative shaping the technology industry: the global race for GPUs. OpenAI has repeatedly stressed its unrelenting appetite for more computational power, framing it as both a technological necessity and a constraint on innovation. As chief product officer Kevin Weil explained on the ‘Moonshot’ podcast in August, every time new GPUs arrive, they are consumed almost instantly. He likened the situation to the early days of online video: as network bandwidth increased, streaming became possible, and each gain in bandwidth was quickly met by more video being created and watched. Similarly, he said, “the more GPUs we have, the more AI everyone will use.”

Sam Altman, OpenAI’s CEO, echoed this perspective in recent remarks, revealing that the company is preparing to launch what he described as ‘new compute-intensive offerings.’ Because of the high costs of these initiatives, some features will initially be limited to paying Pro subscribers, and some upcoming products may carry additional usage fees. Altman characterized this phase as a deliberate experiment, one designed to explore what can be achieved when vast computational resources are directed toward novel and ambitious ideas. “We want to discover what becomes possible,” he wrote on X, “when we push today’s models to their limits using the full extent of available compute.”

OpenAI is not alone in this pursuit. Other major technology players have openly acknowledged their escalating need for GPU infrastructure. For instance, Meta’s CEO, Mark Zuckerberg, remarked on the ‘Access’ podcast that his company views ‘compute per researcher’ as an emerging competitive advantage. By outspending competitors on GPUs and on the custom, large-scale systems required to run them effectively, Meta aims to empower its research teams to explore AI frontiers with fewer constraints.

Together, these insights from Brockman, Altman, and their peers across the tech sector reveal a defining theme of the current AI era: access to computational power has become as valuable and contested as the innovations it enables. For OpenAI, the internal struggle to allocate GPUs is not merely an operational concern but a reflection of the broader tension shaping modern artificial intelligence—how to balance creativity, ambition, and scarcity in the relentless pursuit of progress.

Source: https://www.businessinsider.com/openai-president-allocate-gpu-compute-internally-greg-brockman-2025-10