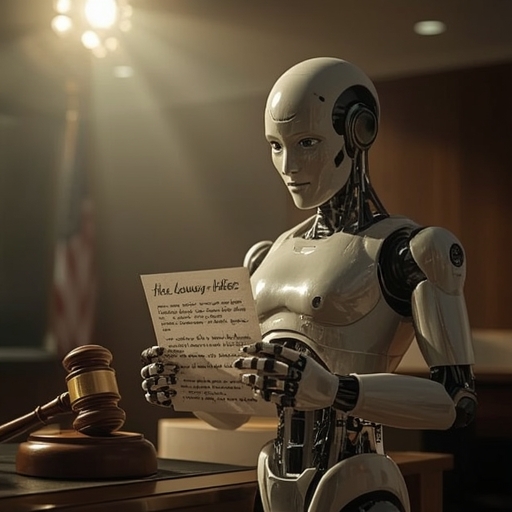

In a remarkable legal scene that seems to belong equally to a courtroom and to a science fiction novel, a new ethical dilemma unfolds: can artificial intelligence truly feel and express remorse? During a contentious trial, a defendant presented an apology not written by human hand but generated by an advanced AI language model. The judge, however, dismissed it as hollow—an imitation devoid of emotion and personal accountability. This moment forces society to reckon with complex questions at the intersection of technology, emotion, and morality.

At the heart of the issue lies the concept of authenticity in communication. When an algorithm crafts language that simulates empathy, do the words carry the moral weight intrinsic to human experience? Sincerity has long depended on an individual’s will to take responsibility for harm caused—an act rooted in conscience, vulnerability, and a desire to restore trust. Yet an AI system, no matter how sophisticated, cannot feel guilt or aspire to redemption; it can merely predict which words will most plausibly convey regret. The emotional cadence, grammatical precision, and contextual nuance that make an apology sound human are computationally modeled, not heartfelt.

Still, the counterargument persists: if an apology’s linguistic form persuades a listener, does the origin matter? We already rely on mediated communications—press releases written by teams, legal statements crafted by counsel, and automated customer responses that seek to soothe complaints. The pivotal distinction is purpose and accountability. While these human-created messages may be strategic, they remain tied to individuals who bear moral or legal responsibility. An AI-generated apology, by contrast, obscures the link between expression and the ethical actor behind it. It transforms remorse into performance, detaching the sentiment from its human source.

The courtroom’s rejection of this automated confession represents more than judicial skepticism; it symbolizes society’s demand that moral responsibility remain humanly grounded. Technology can simulate empathy, but empathy’s essence cannot be downloaded or delegated. As artificial intelligence increasingly mediates how we write, speak, and even feel, humanity must decide which emotional truths remain sacred and inalienable. The question, then, is not only whether AI can seem sorry, but whether we are willing to accept imitation as equivalent to sincerity. When words lose the weight of emotion, what becomes of justice, forgiveness, and the fragile bonds that hold our social fabric together?

Source: https://gizmodo.com/does-sorry-count-when-ai-writes-it-for-you-2000723176